Development Update 9: Massive speedups!

Compatibility improvements and fixed rendering glitches aside: What good is an emulator if everything is a slideshow? I promised to tackle the framerate issues a while ago, and the first major step towards doing so has now been completed: An AArch64 JIT.

This may come as a surprise, but I actually started working on this major feature back in December and have been quietly implementing it piece by piece ever since. A proper JIT requires a lot of infrastructure before it starts delivering the desired speed benefits, and I didn't want to dampen the hype by announcing it in a preliminary state. Now that the pieces have fallen into place, let's see what the JIT has in store for us!

IR Generator

At a whopping 2000 lines of code, this is the heart of the JIT: It turns ARM assembly code into an Intermediate Representation (IR) which is later compiled down to AArch64 code (or x86 on the internal development version). Mikage uses LLVM IR for this purpose, since that allows us to leverage all existing, industry-proven infrastructure around the LLVM project.

We are off to a good start: The instructions already covered by translation are the simple arithmetic ones (mov, add, ...), memory accesses (ldr, pop, ...), branches (bl, bx, ...), and some floating point operations (vneg, vmul, ...).
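To give a rough idea of what "turning ARM code into IR" means, here is a toy sketch for a single instruction class. It is not Mikage's actual code. The function names are hypothetical, guest registers are assumed to live behind pointers named `%r0` to `%r14`, and the IR is produced as LLVM-style text for readability. A real translator would more likely build it through LLVM's C++ API (e.g. `llvm::IRBuilder`).

```cpp
// Sketch only: all names are hypothetical, and the IR is emitted as LLVM-style text.
#include <cassert>
#include <cstdint>
#include <optional>
#include <string>

// Value of an ARM "modified immediate": imm8 rotated right by twice the 4-bit rotate field.
uint32_t DecodeModifiedImmediate(uint32_t instr) {
    const uint32_t imm8 = instr & 0xFF;
    const uint32_t rotation = ((instr >> 8) & 0xF) * 2;
    return rotation == 0 ? imm8 : (imm8 >> rotation) | (imm8 << (32 - rotation));
}

// Translates an unconditional, non-flag-setting ADD/SUB/MOV with an immediate operand.
// Guest registers are modelled as pointers %r0..%r14; `next_value` hands out fresh SSA names.
// Anything else yields std::nullopt so the caller can pick another translation path.
std::optional<std::string> TranslateDataProcessingImm(uint32_t instr, int& next_value) {
    const bool always = (instr >> 28) == 0xE;                  // condition AL
    const bool data_proc_imm = ((instr >> 25) & 0x7) == 0b001; // data-processing, immediate
    const bool sets_flags = (instr >> 20) & 1;                 // S bit
    const uint32_t opcode = (instr >> 21) & 0xF;
    const uint32_t rn = (instr >> 16) & 0xF;
    const uint32_t rd = (instr >> 12) & 0xF;
    // Reading or writing r15 (PC) needs special handling, so leave it to other code paths.
    if (!always || !data_proc_imm || sets_flags || rn == 15 || rd == 15) return std::nullopt;

    const std::string imm = std::to_string(DecodeModifiedImmediate(instr));
    const std::string dst = "%r" + std::to_string(rd);
    if (opcode == 0b1101) {  // MOV rd, #imm
        return "store i32 " + imm + ", ptr " + dst + "\n";
    }
    if (opcode != 0b0100 && opcode != 0b0010) return std::nullopt;  // only ADD and SUB here

    const std::string src = "%v" + std::to_string(next_value++);
    const std::string res = "%v" + std::to_string(next_value++);
    const char* op = opcode == 0b0100 ? "add" : "sub";
    return src + " = load i32, ptr %r" + std::to_string(rn) + "\n" +
           res + " = " + op + " i32 " + src + ", " + imm + "\n" +
           "store i32 " + res + ", ptr " + dst + "\n";
}
```

For example, `add r0, r1, #4` (encoded as `0xE2810004`) becomes a load of `r1`, an `add` and a store to `r0`. The instructions this helper rejects are exactly the ones that need some other treatment.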

On top of that, there is a Thumb layer that converts Thumb instructions into something the IR generator understands. That saves us from having to translate two instruction sets, at basically no cost!
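One plausible way to build such a layer is to re-encode each 16-bit Thumb instruction as the equivalent 32-bit ARM instruction, so the rest of the pipeline only ever sees ARM encodings. This is an assumption made for this illustration, not a description of Mikage's code. The hypothetical `ThumbToArm` below handles just two Thumb encodings:

```cpp
// Sketch only: ThumbToArm is a hypothetical helper covering two encodings.
#include <cassert>
#include <cstdint>
#include <optional>

// Re-encodes a 16-bit Thumb instruction as an equivalent 32-bit ARM instruction,
// so that a single ARM decoder / IR generator can serve both instruction sets.
std::optional<uint32_t> ThumbToArm(uint16_t thumb) {
    constexpr uint32_t kCondAlways = 0xEu << 28;

    // MOVS Rd, #imm8   (Thumb: 00100 Rd:3 imm8:8)
    if ((thumb >> 11) == 0b00100) {
        const uint32_t rd = (thumb >> 8) & 0x7;
        const uint32_t imm8 = thumb & 0xFF;
        // ARM MOVS Rd, #imm8: data-processing immediate, opcode 1101, S=1, rotate 0
        return kCondAlways | 0x03B00000u | (rd << 12) | imm8;
    }

    // ADDS Rd, Rn, #imm3   (Thumb: 0001110 imm3:3 Rn:3 Rd:3)
    if ((thumb >> 9) == 0b0001110) {
        const uint32_t imm3 = (thumb >> 6) & 0x7;
        const uint32_t rn = (thumb >> 3) & 0x7;
        const uint32_t rd = thumb & 0x7;
        // ARM ADDS Rd, Rn, #imm3: data-processing immediate, opcode 0100, S=1
        return kCondAlways | 0x02900000u | (rn << 16) | (rd << 12) | imm3;
    }
    return std::nullopt;  // not covered by this sketch
}
```

Both pairs of encodings update the condition flags identically, which is what makes this one-to-one mapping safe. Thumb instructions that read the PC would need extra care, since the PC reads as the instruction address plus 4 in Thumb state but plus 8 in ARM state.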

There are many ARM instructions not yet supported by this IR generator, but luckily that need not prevent us from achieving good results.

Inline Interpreter Fallback

One critical metric for good JIT performance is the length of blocks, i.e. groups of instructions that are translated and later executed in one go. If your blocks are very short (only a few instructions), the overhead of translation and of the generated function prologues/epilogues outweighs the time saved, and you'd be better off just running the interpreter.

Considering how sparse the set of currently translated instructions is, this is a real problem, and I didn't want to delay the JIT's release just because it would run too slowly until the entire ARM instruction set was implemented.

There is a good solution though: Whenever the JIT hits an unknown instruction, a fallback to the interpreter core is emitted right inside the block. This allows translation to just continue afterwards without costly context switches!
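In heavily simplified code, the translation loop might look like the sketch below. The `IrOp` record, the predicate parameters and the rule that a block ends after its first control-flow instruction are all assumptions made for illustration. A real backend would emit actual IR and an actual call into the interpreter at the point where this sketch only tags the instruction.

```cpp
// Sketch only: IrOp and TranslateBlock are hypothetical names.
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// One entry of a translated block: either translated natively, or tagged so that
// code generation emits an inline call into the interpreter for that single instruction.
struct IrOp {
    enum class Kind { Native, InterpreterCall };
    Kind kind;
    uint32_t address;      // guest address of the instruction
    uint32_t instruction;  // raw ARM encoding
};

using InstrPredicate = std::function<bool(uint32_t)>;

// Translates instructions starting at `base` up to and including the first control-flow
// instruction. Unsupported instructions do NOT end the block; they become InterpreterCall.
std::vector<IrOp> TranslateBlock(const std::vector<uint32_t>& code, uint32_t base,
                                 const InstrPredicate& ir_supports,
                                 const InstrPredicate& is_control_flow) {
    std::vector<IrOp> block;
    for (std::size_t i = 0; i < code.size(); ++i) {
        const uint32_t instr = code[i];
        const uint32_t address = base + static_cast<uint32_t>(4 * i);
        if (is_control_flow(instr)) {
            // The interpreter fallback must never change control flow,
            // so every control-flow instruction has to be translated natively.
            assert(ir_supports(instr) && "control flow must be handled by the JIT");
            block.push_back({IrOp::Kind::Native, address, instr});
            break;
        }
        block.push_back({ir_supports(instr) ? IrOp::Kind::Native : IrOp::Kind::InterpreterCall,
                         address, instr});
    }
    return block;
}
```

With this structure, an unsupported `mul` in the middle of a block simply turns into an interpreter call, and translation carries on with the next instruction.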

A prerequisite for this approach is that the interpreter fallback must never perform any control flow. Imagine the instruction branched to another address, yet what follows in the emitted block still refers to the old point of execution! So before this approach could be used, all control flow instructions had to be implemented in the JIT, including less obvious ones such as `mov pc, r1` or `add pc, 40`. Luckily, that work has been done now and the interpreter fallback works superbly.
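Spotting those less obvious cases boils down to checking which instructions can write the PC (r15). The hypothetical `WritesPc` below is a sketch for ARM (A32) encodings only. It covers branches, `bx`/`blx`, data-processing instructions and single loads with the PC as destination, and `ldm`/`pop` with the PC in the register list. Thumb and more exotic cases are ignored.

```cpp
// Sketch only: WritesPc is a hypothetical helper for common A32 encodings.
#include <cassert>
#include <cstdint>

bool WritesPc(uint32_t instr) {
    const uint32_t rd = (instr >> 12) & 0xF;
    const bool bit25 = (instr >> 25) & 1;
    const bool bit7 = (instr >> 7) & 1;
    const bool bit4 = (instr >> 4) & 1;
    const bool load = (instr >> 20) & 1;  // L bit of load/store instructions

    // Unconditional space (e.g. BLX <imm>): conservatively treat as control flow.
    if ((instr >> 28) == 0xF) return true;

    // B / BL: bits 27-25 = 101
    if (((instr >> 25) & 0x7) == 0b101) return true;

    // BX Rm / BLX Rm
    const uint32_t bx_bits = instr & 0x0FFFFFF0;
    if (bx_bits == 0x012FFF10 || bx_bits == 0x012FFF30) return true;

    // Data-processing (bits 27-26 = 00) with Rd = PC, e.g. "mov pc, r1" or "add pc, pc, #40".
    // Multiplies / extra load-stores and the TST/TEQ/CMP/CMN opcode range never write Rd here.
    if (((instr >> 26) & 0x3) == 0b00) {
        const bool mul_or_extra = !bit25 && bit7 && bit4;
        const uint32_t opcode = (instr >> 21) & 0xF;
        const bool compare_or_misc = opcode >= 0b1000 && opcode <= 0b1011;
        return !mul_or_extra && !compare_or_misc && rd == 15;
    }

    // LDR / LDRB with Rd = PC (bits 27-26 = 01), excluding the media space (bit25 = bit4 = 1).
    if (((instr >> 26) & 0x3) == 0b01) {
        const bool media = bit25 && bit4;
        return !media && load && rd == 15;
    }

    // LDM (bits 27-25 = 100) with PC (bit 15) in the register list, e.g. "pop {r4, pc}".
    if (((instr >> 25) & 0x7) == 0b100) return load && ((instr >> 15) & 1);

    // Coprocessor instructions, SVC etc. are treated as not writing the PC in this sketch.
    return false;
}
```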

Analysis Prepass

Even with large JIT blocks, there is a big problem: Compilation latency. Each block is compiled individually, which for most games could lead to thousands of separate JIT invocations during bootup alone!

To avoid this issue, Mikage performs control flow analysis before invoking the JIT. It goes through all ARM instructions in the block it's about to translate, gathers a list of all branches to other blocks, and repeats the process for those blocks too. This gives the JIT a very clear picture of how blocks are connected, so it can compile them all in one go. This is a massive improvement: Instead of way too many separate translation processes, you'll get a single one that compiles thousands of blocks at once.
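The core of such a prepass is a classic worklist algorithm. The sketch below is a simplified illustration under several assumptions: guest memory is modelled as a `std::map` from address to instruction word, only ARM `b`/`bl` are followed, `bx` ends a block without a known successor, and other PC writes (such as `mov pc, r1`) are ignored. For each discovered block, it records the blocks at which execution may continue.

```cpp
// Sketch only: BranchTarget, DiscoverBlocks and Cfg are hypothetical names.
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Target of an ARM B/BL at `address`: the PC reads as address + 8 and the signed
// 24-bit offset counts words. Shifting imm24 to the top and arithmetic-shifting back
// by 6 sign-extends it and multiplies by 4 in one step.
uint32_t BranchTarget(uint32_t address, uint32_t instr) {
    const int32_t offset = static_cast<int32_t>(instr << 8) >> 6;
    return address + 8 + static_cast<uint32_t>(offset);
}

// Block start address -> addresses of the blocks that may execute next.
using Cfg = std::map<uint32_t, std::vector<uint32_t>>;

Cfg DiscoverBlocks(const std::map<uint32_t, uint32_t>& memory, uint32_t entry) {
    Cfg cfg;
    std::vector<uint32_t> worklist{entry};
    while (!worklist.empty()) {
        const uint32_t start = worklist.back();
        worklist.pop_back();
        if (cfg.count(start)) continue;  // already analyzed
        assert(start % 4 == 0 && "ARM code is word-aligned");
        std::vector<uint32_t>& successors = cfg[start];

        for (uint32_t pc = start;; pc += 4) {
            const auto it = memory.find(pc);
            if (it == memory.end()) break;                  // ran off the known code
            const uint32_t instr = it->second;
            if ((instr >> 28) == 0xF) break;                // unconditional space (e.g. BLX imm): not handled
            if ((instr & 0x0FFFFFF0) == 0x012FFF10) break;  // BX Rm: target only known at runtime
            if (((instr >> 25) & 0x7) != 0b101) continue;   // not B/BL: keep scanning

            successors.push_back(BranchTarget(pc, instr));
            const bool is_link = (instr >> 24) & 1;
            const bool is_conditional = (instr >> 28) != 0xE;
            if (is_link || is_conditional) successors.push_back(pc + 4);  // execution may continue here
            break;  // a branch always ends the current block
        }
        worklist.insert(worklist.end(), successors.begin(), successors.end());
    }
    return cfg;
}
```

Once every reachable block and its successors are known, all of them can be handed to the compiler together, and each known edge can become a direct jump.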

This approach also has a few nice side benefits: For one, jumps from one block to another (such as loops, conditionals, etc.) can now be translated into plain branches in the generated assembly code, where previously each one required an expensive function call. Furthermore, the backend can now perform control-flow optimizations such as inlining. Both lead to the same result: Much shorter and faster output assembly, and hence better performance!

Background compilation

Alas, while grouping blocks reduced total latency, it worsened perceived latency a lot: After all, compiling 8000 blocks at once takes a while! You wouldn't want to wait half a minute just to see the loading logo of your game. Something had to be done about this.

Luckily, Mikage already has a CPU engine that does not suffer from latency issues at all: The interpreter. Of course, the whole reason I'm working on the JIT is that the interpreter is too slow. But maybe we can have the best of both worlds?

Indeed we can! When execution hits an uncompiled block, Mikage won't immediately translate it. Instead, it queues the block start address for translation on a background thread. It doesn't really care how long that thread needs to compile the block: It temporarily uses the interpreter until execution hits a block that has already been compiled. This allows execution to continue even though the JIT is still busy.
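Here is a minimal sketch of that hand-off using standard C++ threads. The class and method names are hypothetical, and the `compile` callback stands in for the actual JIT. The key properties are that `Lookup` never waits for compilation, that each block is queued at most once, and that the slow compilation step runs without holding the lock.

```cpp
// Sketch only: BackgroundCompiler and CompiledBlock are hypothetical names.
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <unordered_map>
#include <unordered_set>
#include <utility>

// Stand-in for a compiled block (in a real JIT: a pointer to generated host code).
using CompiledBlock = std::function<void()>;

class BackgroundCompiler {
public:
    explicit BackgroundCompiler(std::function<CompiledBlock(uint32_t)> compile)
        : compile_(std::move(compile)) {
        worker_ = std::thread([this] { Run(); });  // started once all members exist
    }

    ~BackgroundCompiler() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stop_ = true;
        }
        work_cv_.notify_all();
        worker_.join();
    }

    // Called by the dispatcher at block boundaries. Never waits for compilation: returns
    // nullptr (after queuing the block once) if it isn't ready, so the caller interprets.
    // unordered_map keeps element addresses stable and nothing is erased, so the returned
    // pointer stays valid.
    const CompiledBlock* Lookup(uint32_t address) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (auto it = compiled_.find(address); it != compiled_.end()) return &it->second;
        if (queued_.insert(address).second) {
            pending_.push_back(address);
            work_cv_.notify_one();
        }
        return nullptr;
    }

    // Waits until the queue is drained (handy for tests; an emulator would not call this).
    void WaitIdle() {
        std::unique_lock<std::mutex> lock(mutex_);
        idle_cv_.wait(lock, [this] { return pending_.empty() && !busy_; });
    }

private:
    void Run() {
        std::unique_lock<std::mutex> lock(mutex_);
        while (true) {
            work_cv_.wait(lock, [this] { return stop_ || !pending_.empty(); });
            if (stop_) return;
            const uint32_t address = pending_.front();
            pending_.pop_front();
            busy_ = true;
            lock.unlock();
            CompiledBlock block = compile_(address);  // the slow part, done without the lock
            lock.lock();
            compiled_.emplace(address, std::move(block));
            busy_ = false;
            idle_cv_.notify_all();
        }
    }

    std::function<CompiledBlock(uint32_t)> compile_;
    std::mutex mutex_;
    std::condition_variable work_cv_, idle_cv_;
    std::deque<uint32_t> pending_;
    std::unordered_set<uint32_t> queued_;  // every address ever queued, to avoid duplicates
    std::unordered_map<uint32_t, CompiledBlock> compiled_;
    bool busy_ = false;
    bool stop_ = false;
    std::thread worker_;
};
```

A dispatcher would call `Lookup` whenever execution reaches a block boundary. It runs the compiled code if a block comes back, and otherwise keeps stepping the interpreter.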

During bootup, this means you'll notice things running significantly slower, but that's much better than the complete standstill you'd have seen before. More importantly, during gameplay you can barely notice any compilation latency anymore.

Conditional Flags Management

The ARM instruction set supports conditionally executing instructions based on the results of a previous operation. For instance, an error handling function might be called conditionally depending on whether an error code compares equal to 0 or not. Emulating this naively means decoding and re-encoding the condition flags ("NZCV") after every instruction that might touch them.

Suffice it to say, this caused the generated assembly code to blow up quite a bit. Instead, each of the flags is now managed by a dedicated IR variable; all of them are initialized on function entry and encoded back into the NZCV flags on function exit.
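As a plain C++ illustration of the idea (the JIT does this with IR variables, and the names here are hypothetical), the sketch below keeps N, Z, C and V as four separate values. It unpacks them from the CPSR once on entry, lets flag-setting instructions like `adds` update just those values, evaluates condition codes directly from them, and packs them back only on exit. A likely side benefit of separate variables is that LLVM's optimizer can drop flag computations whose results are overwritten before anything reads them.

```cpp
// Sketch only: Flags, UnpackFlags, PackFlags, Adds and ConditionPassed are hypothetical names.
#include <cassert>
#include <cstdint>

// The four condition flags held as separate values instead of a packed CPSR word.
struct Flags {
    bool n, z, c, v;
};

// CPSR layout: N = bit 31, Z = bit 30, C = bit 29, V = bit 28. Done once on block entry.
Flags UnpackFlags(uint32_t cpsr) {
    return {((cpsr >> 31) & 1) != 0, ((cpsr >> 30) & 1) != 0,
            ((cpsr >> 29) & 1) != 0, ((cpsr >> 28) & 1) != 0};
}

// Done once on block exit: writes the flags back, keeping all other CPSR bits.
uint32_t PackFlags(uint32_t cpsr, Flags f) {
    return (cpsr & 0x0FFFFFFF) | (uint32_t(f.n) << 31) | (uint32_t(f.z) << 30) |
           (uint32_t(f.c) << 29) | (uint32_t(f.v) << 28);
}

// ADDS: computes the result and updates only the flag variables; no CPSR traffic.
uint32_t Adds(uint32_t a, uint32_t b, Flags& f) {
    const uint32_t result = a + b;
    f.n = (result >> 31) != 0;
    f.z = result == 0;
    f.c = result < a;                                       // unsigned carry out
    f.v = (((a ^ result) & (b ^ result)) >> 31) != 0;       // signed overflow
    return result;
}

// A subset of the ARM condition codes, evaluated directly from the flag variables.
bool ConditionPassed(uint32_t cond, Flags f) {
    switch (cond) {
    case 0x0: return f.z;                    // EQ
    case 0x1: return !f.z;                   // NE
    case 0x2: return f.c;                    // CS/HS
    case 0x3: return !f.c;                   // CC/LO
    case 0xA: return f.n == f.v;             // GE
    case 0xB: return f.n != f.v;             // LT
    case 0xC: return !f.z && f.n == f.v;     // GT
    case 0xD: return f.z || f.n != f.v;      // LE
    case 0xE: return true;                   // AL
    default:
        assert(false && "condition code not covered by this sketch");
        return false;
    }
}
```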

Constant Read Optimization

Memory accesses are complicated: Due to virtual memory, you can't just translate loads and stores in the emulated CPU into plain memory accesses in the generated assembly code. Implementing the entire virtual address translation logic in assembly would be very laborious, so instead Mikage's JIT replaces memory accesses with calls to a C++ function that deals with them.

That can be very slow, though: Each call into C++ is a costly context switch, where the host CPU state has to be saved to memory and restored afterwards, since the JIT can't know which registers the C++ function might modify. This is doubly concerning because ARM code typically loads constants from literal pools stored alongside the code, so even accessing a constant requires a read from memory. To mitigate this, `ReadMemory32` calls are optimized in a post-processing phase: If the read address is known and lies in read-only memory, the JIT can conveniently bake the value at that address right into the generated IR instead of emitting a full-blown `ReadMemory32` function call.
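A toy version of that post-processing pass could look like this. The IR here is a made-up two-opcode structure rather than LLVM IR. The list of read-only regions (for example the loaded code segment, including its literal pools) is assumed to be known to the JIT. The fold is only valid because those regions can never be written.

```cpp
// Sketch only: IrInstr, ReadOnlyRegion and FoldConstantReads are hypothetical names.
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

// Toy IR instruction: a 32-bit memory read, or an already-folded constant.
struct IrInstr {
    enum class Op { ReadMemory32, Constant };
    Op op;
    std::optional<uint32_t> address;  // set if the read address is a compile-time constant
    uint32_t value = 0;               // used by Op::Constant
};

// A read-only region of guest memory together with its (immutable) contents.
struct ReadOnlyRegion {
    uint32_t base;
    std::vector<uint32_t> words;
    bool Contains(uint32_t addr) const {
        return addr >= base && addr - base < words.size() * 4 && addr % 4 == 0;
    }
    uint32_t Read(uint32_t addr) const { return words[(addr - base) / 4]; }
};

// Replaces reads from constant, read-only addresses by the value stored there,
// so no call into the C++ memory handler is emitted for them. Returns the fold count.
int FoldConstantReads(std::vector<IrInstr>& ir, const std::vector<ReadOnlyRegion>& rodata) {
    int folded = 0;
    for (IrInstr& instr : ir) {
        if (instr.op != IrInstr::Op::ReadMemory32 || !instr.address) continue;
        for (const ReadOnlyRegion& region : rodata) {
            if (region.Contains(*instr.address)) {
                instr = {IrInstr::Op::Constant, std::nullopt, region.Read(*instr.address)};
                ++folded;
                break;
            }
        }
    }
    return folded;
}
```

Reads whose address is only known at runtime, or which target writable memory, are left alone and still go through the C++ handler.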

First Results

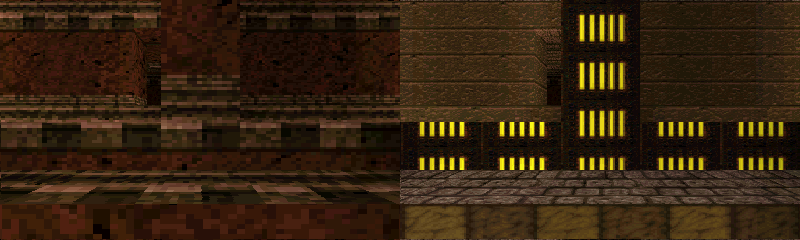

The video above shows before/after footage of yeti3DS, my primary benchmark for the JIT work. This may be somewhat counter-intuitive: Why optimize simple homebrew applications when proper games struggle most? The reason is that yeti3DS is a very CPU-intensive application, since it renders its 3D graphics in software without using the GPU. This means CPU-side speedups translate almost one-to-one into speedups of the entire emulation, without GPU emulation slowing us down.

And it shows: yeti3DS used to be a slideshow, running at only a few frames per second. One 650% improvement later, we are at 50-60 FPS!

Official games of course also see a speedup, but are quickly limited by GPU emulation now. Still, Retro City Rampage DX sees a significant framerate improvement of 60%.

Future Prospects

Hopefully this writeup gives you a good overview of the problems I've faced while working on the JIT. With this groundwork laid, we're in a good position for further speedups, which will come once the IR generator covers the ARM instruction set more comprehensively (especially in terms of floating point math).

Some elements, such as the background compilation, also give Mikage an edge over Citra: If you've ever tried any of the unofficial Citra builds with a JIT, you'll have noticed very heavy microstuttering. Background compilation takes that issue out of the picture.

Over the next month, I'll be working on optimizing some of the bottlenecks in GPU emulation, such as the texture cache. Stay tuned!